1. Introduction

As small Large Language Models (sLLMs) continue to revolutionize AI applications, efficiently running these models on edge platforms has become a key technical milestone. In this article, we demonstrate how to deploy and optimize DeepSeek-R1, a representative sLLM, on Advantech’s EPC-R7300, which is powered by NVIDIA® Jetson Orin™, by using Advantech’s Edge AI SDK.

The EPC-R7300 delivers high performance in a compact, power-efficient design, making it ideal for advanced AI inference at the edge. This guide outlines the setup process, key considerations, and performance insights to help developers accelerate sLLM deployment on embedded systems.

2. Prerequisites

Necessary Hardware:

You need an EPC-R7300 with a keyboard, mouse, and HDMI monitor. The unit used here is equipped with an NVIDIA Jetson Orin Nano Super module and a 128GB NVMe SSD, runs JetPack 6.2, and offers the following features:

- Compatible with NVIDIA Jetson Orin™ Nano Super module, delivering 40~67 TOPS of AI performance

- Industrial and expandable design

- HDMI up to 3840 x 2160 @60Hz resolution

- 2 x GbE LAN, 2 x USB3.2 Gen 2

- 1 x Nano SIM slot

- 1 x M.2 3042/52 B-Key Slot, 1 x M.2 2230 E-Key Slot, 1 x M.2 2280 M-Key Slot

- Supports Gemma3, DeepSeek R1, Qwen, Llama

Necessary Software:

Advantech Edge AI SDK is required.

Because the Advantech Edge AI SDK saves significant installation time, we can go straight to running DeepSeek-R1 with the Edge AI SDK on the EPC-R7300.

In just a few steps, you can enable natural language conversations, mathematical reasoning, and a wide range of professional applications on your own device. Whether you’re an engineer or a system integration partner, getting started is simple and intuitive!

3. Click the Edge AI SDK icon on the desktop

Launch the Edge AI SDK and provide the user information:

Accept the End-user License Agreement:

A token is sent to the email address you provided. Enter that token:

Edge AI SDK setup complete:

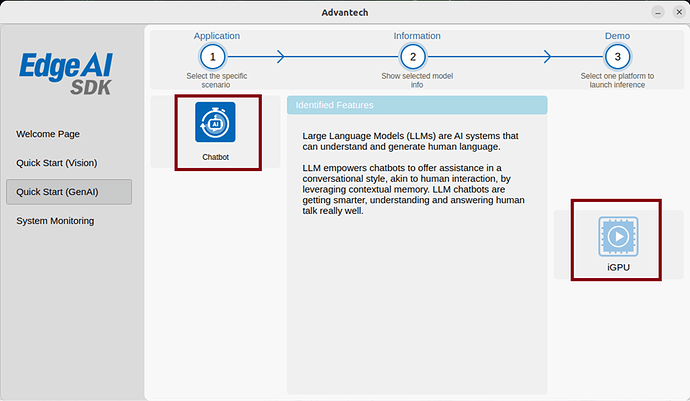

Select Quick Start (GenAI), then select Chatbot and iGPU:

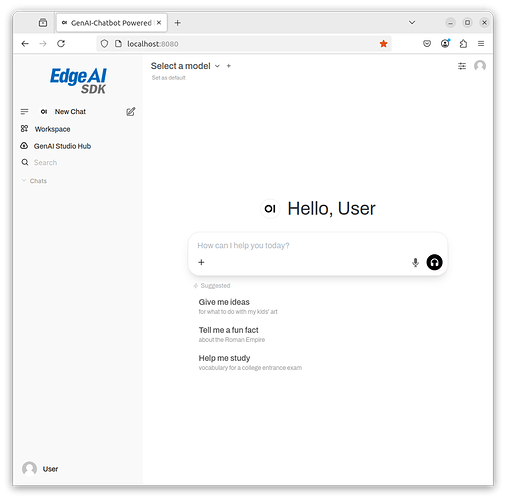

This launches the GenAI chatbot on an Edge AI SDK page in a browser.

4. Demo for running model

The chatbot supports the Ollama models listed on Ollama.com (Ollama Search).

- Download the deepseek-r1:1.5b model:

Search for the deepseek-r1 model on Ollama.com:

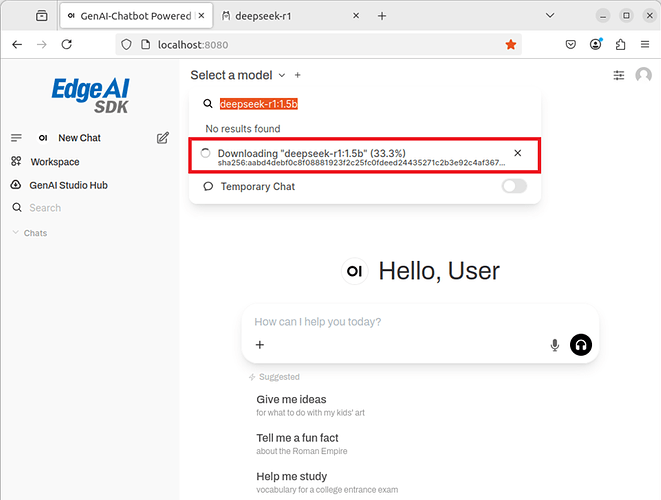

For example, to use the deepseek-r1 thinking model, copy the deepseek-r1:1.5b model name.

Click the drop-down list beside Select a model. Paste the model name copied from Ollama.com, then select Pull “deepseek-r1:1.5b” from Ollama.com.

The model starts downloading.

After the model finishes downloading, select the model you downloaded from the drop-down list. The example here is the deepseek-r1:1.5b model.
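The same check can be scripted if the chatbot is backed by a local Ollama server. That is an assumption here, since the article only shows the web interface. The sketch below assumes Ollama's default address `http://localhost:11434` and its `/api/tags` endpoint, which lists locally available models. The helper names `installed_models` and `fetch_tags` are hypothetical.

```python
import json
import urllib.request

# Assumption: the Edge AI SDK chatbot talks to a local Ollama server
# on Ollama's default port. Adjust the address if your setup differs.
OLLAMA_URL = "http://localhost:11434"


def installed_models(tags_response: dict) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [m["name"] for m in tags_response.get("models", [])]


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """Ask the Ollama server which models are available locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return json.load(resp)
```

On the device, `"deepseek-r1:1.5b" in installed_models(fetch_tags())` should evaluate to `True` once the download has finished, provided the server is reachable at the assumed address.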

The chatbot now switches to deepseek-r1:1.5b, and you can start chatting with the model, just as you would with other LLM interfaces.
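A conversation like this can also be driven from a script instead of the browser. This is a minimal sketch, assuming the chatbot is served by a local Ollama instance at the default address `http://localhost:11434` (the article does not confirm this). It uses Ollama's `/api/chat` endpoint; the function names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: default Ollama address


def build_chat_request(model: str, prompt: str) -> dict:
    """Build the JSON payload for Ollama's POST /api/chat endpoint."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete reply instead of a token stream
    }


def chat(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send one user message and return the assistant's reply text."""
    body = json.dumps(build_chat_request(model, prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)["message"]["content"]
```

For example, `chat("deepseek-r1:1.5b", "What is 17 * 23?")` would return the model's reply as a string. The long timeout is a guess meant to leave room for slow first-token latency on a small device.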

- Download the gemma2:2b model:

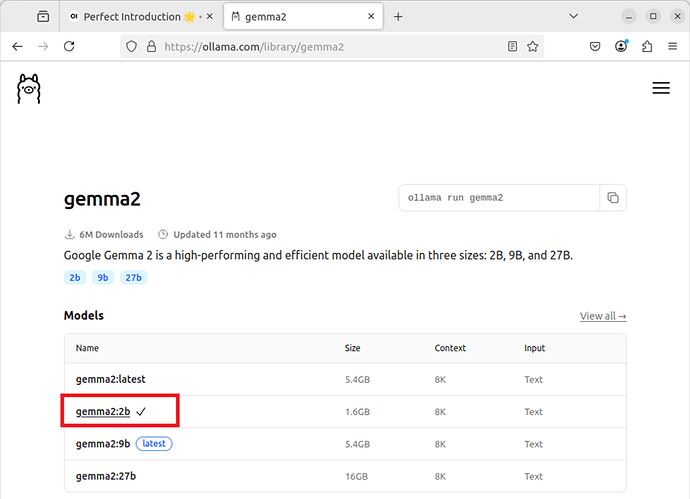

Search for the gemma2 model on Ollama.com:

For example, to use the gemma2 model, copy the gemma2:2b model name.

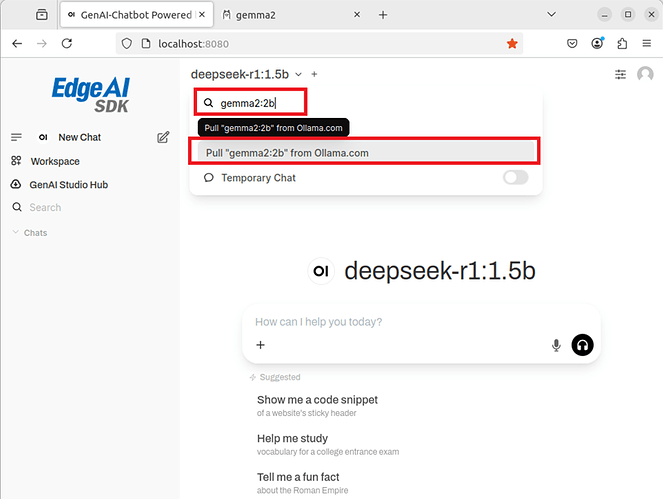

Click the drop-down list beside Select a model. Paste the model name copied from Ollama.com, then select Pull “gemma2:2b” from Ollama.com.

The model starts downloading.

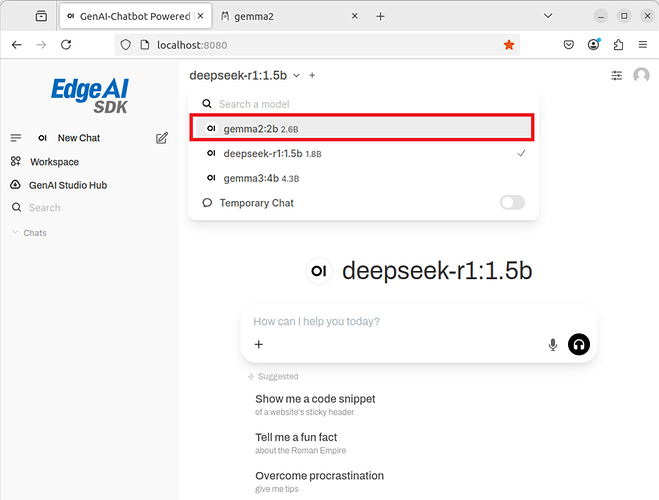

After the model finishes downloading, select the model you downloaded from the drop-down list. The example here is gemma2:2b.

The chatbot now switches to gemma2:2b, and you can start chatting with the model, just as you would with other LLM chatbots.

5. Run Results

Demo video:

(1) Screencast gemma2:2b sLLM run results, without thinking:

(2) Screencast deepseek-r1:1.5b sLLM run results, with thinking:

After completing the setup, we successfully demonstrated the feasibility of running the small Large Language Model (sLLM) DeepSeek-R1 on Advantech’s edge platform powered by NVIDIA Jetson Orin Nano Super.
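The two demos differ in whether the model writes out its reasoning before answering. DeepSeek-R1 models commonly wrap that reasoning in `<think>...</think>` tags within the reply text, while gemma2 emits no such block. The sketch below separates the two parts of a raw reply. It assumes the tags appear inline in the text; some server versions may return the reasoning in a separate field instead. The name `split_thinking` is hypothetical.

```python
import re

# Assumption: the reply wraps its reasoning in <think>...</think>,
# as DeepSeek-R1 models commonly do. Other models emit no such block.
_THINK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_thinking(reply: str) -> tuple[str, str]:
    """Return (thinking, answer) extracted from a raw model reply."""
    match = _THINK.search(reply)
    if match is None:
        # No reasoning block: the whole reply is the answer.
        return "", reply.strip()
    thinking = match.group(1).strip()
    # Remove the reasoning block and keep everything around it.
    answer = (reply[:match.start()] + reply[match.end():]).strip()
    return thinking, answer
```

Showing only the answer keeps a user interface compact, while keeping the thinking text available is useful for debugging the model's reasoning.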

Compared to traditional cloud-dependent approaches for LLM deployment, running sLLMs on Advantech’s edge platform offers significant advantages: reduced latency, enhanced data privacy, lower network dependency, and the potential for long-term cost savings.
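To put a number on local inference speed, one option is to compute tokens per second from the timing metadata in an Ollama response. This sketch assumes the chatbot is backed by Ollama, whose non-streaming responses include `eval_count` (generated tokens) and `eval_duration` (generation time in nanoseconds). The function name is hypothetical.

```python
def tokens_per_second(response: dict) -> float:
    """Generation speed from the timing fields of an Ollama response.

    eval_count is the number of generated tokens and eval_duration is
    the time spent generating them, in nanoseconds.
    """
    duration_ns = response["eval_duration"]
    if duration_ns <= 0:
        raise ValueError("eval_duration must be positive")
    # Convert nanoseconds to seconds, then divide tokens by seconds.
    return response["eval_count"] / (duration_ns / 1e9)
```

This measures generation only. Model load time and prompt processing are reported in separate fields and would need to be added for an end-to-end latency figure.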

6. Conclusion

The Advantech Edge AI SDK empowers developers to efficiently deploy and run small Large Language Models (sLLMs) like DeepSeek-R1 on edge platforms. By combining the high-performance computing of the EPC-R7300 with an optimized software environment, the SDK streamlines the setup process and accelerates AI application development. Developers can take full advantage of Jetson Orin’s capabilities without complex configuration, enabling faster prototyping and deployment. Advantech’s integrated hardware-software solution breaks development boundaries and helps you create smarter and more efficient edge AI systems.

7. Supplementary

Beyond DeepSeek and Gemma, the EPC-R7300, powered by the NVIDIA® Jetson Orin™ Nano and Super platforms, supports a wide variety of sLLM models, allowing developers to flexibly choose based on their project requirements.

Below is performance reference data for the EPC-R7300 with popular sLLM models, including Llama, Qwen, Phi, and SmolLM2, demonstrating its versatility and performance. These figures not only demonstrate the computing capabilities of the EPC-R7300 but also provide a useful reference for developers in model selection and optimization.