On December 17, 2024, NVIDIA CEO Jensen Huang, in his signature leather jacket, unexpectedly dropped a video on YouTube. In a playful twist, he pulled a “cake” out of his kitchen oven and introduced it as a brand-new AI machine: the Jetson Orin Nano Super Developer Kit. He claimed it delivered a 1.7x performance improvement, supported CUDA and the cuDNN deep neural network library, and offered a new architecture capable of handling robotics and large language models.

The Specifications of the So-Called “New” Jetson Orin Nano Super

- 6-core Arm Cortex-A78AE CPU

- 1024 CUDA cores

- 32 Tensor Cores

- 8GB 128-bit LPDDR5 memory

At this point, my inner Internet Conan (Sherlock Holmes of the web) smelled something suspicious. Looking at the specs, weren’t they exactly the same as the Jetson Orin Nano without the “Super” that I already had?

It didn’t take long for someone to raise the question on NVIDIA’s official forums:

“Does this mean there is no hardware difference? It’s just a BSP/software performance improvement?”

NVIDIA’s response?

“Yes, the existing Jetson Orin Nano Developer Kit can be upgraded to Jetson Orin Nano Super Developer Kit with this software update.”

And just a few days ago, this exact question was added to NVIDIA’s official FAQ.

Source: NVIDIA Official Website

In other words, the so-called brand-new Jetson Orin Nano Super is identical to the previous Jetson Orin Nano in terms of hardware. The 1.7x performance boost comes entirely from a software update. If you already own the older Orin Nano, you just need to install the new software, and voilà: you’ve got yourself the “Super” version, with no need to buy a new device just for the performance bump. From now on, all new Jetson Orin Nano units will ship in Super mode; there will be no more “plain” Nano versions.

So, was Jensen Huang misleading us by calling it “All Brand New”? Technically, yes—it’s not a new architecture, just the same old thing repackaged. You might think this is a case of false advertising, right?

Wrong.

Here’s where things get interesting: while the Jetson Orin Nano Super delivers improved performance, the price has dropped from $499 (~TWD 16,400) to $249 (~TWD 8,200). And that, my friends, is what real value looks like for consumers.

The Advantage of Orin Nano Super

So, how does the same old hardware achieve a 1.7x performance boost through software alone? And another burning question: can a tiny 8GB machine suddenly become a powerhouse just because of some optimized software?

In this article, let’s tackle the first question: Where does the extra performance in Super Mode actually come from?

The possible answer? Increased power and overclocking.

By comparing two different JetPack software versions, the previous version 5.1 and the Super version 6.1, we notice a key difference. The older version had a maximum power mode of 15W, while the Super version introduces a new MAXN mode, which goes beyond 15W. According to NVIDIA’s official data, MAXN mode pushes power consumption up to approximately 25W.

So, what happens when we switch to MAXN mode? Let’s find out.

Super Mode: Unleashing More Power

The chart below shows the operating data after switching to MAXN mode. The results are clear:

- CPU clock speed jumps from 1.5GHz to 1.7GHz.

- Memory frequency increases from 2.1GHz to 3.2GHz.

- Most importantly, the GPU frequency, which directly impacts AI computing, climbs from 635MHz to 1020MHz.

This is the core concept behind Super Mode—by squeezing more power out of the hardware, AI performance sees a significant boost. Based on official data, both integer and floating-point neural network computations improve by roughly 70%.
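To put the clock changes above in perspective, the ratios can be computed directly. This is a back-of-the-envelope sketch that uses only the figures reported in this article; real AI throughput depends on more than clock speed (memory bandwidth, power limits, and thermals all play a part), so treat the ratios as a rough guide rather than a prediction.

```python
# Clock speeds reported above for the 15W mode and MAXN mode: (before, after).
CLOCKS = {
    "CPU": (1.5, 1.7),      # GHz
    "Memory": (2.1, 3.2),   # GHz
    "GPU": (635, 1020),     # MHz
}


def clock_ratio(before: float, after: float) -> float:
    """How many times higher the new clock is than the old one."""
    return after / before


for name, (before, after) in CLOCKS.items():
    ratio = clock_ratio(before, after)
    print(f"{name}: {ratio:.2f}x ({(ratio - 1) * 100:.0f}% higher)")

# Prints:
# CPU: 1.13x (13% higher)
# Memory: 1.52x (52% higher)
# GPU: 1.61x (61% higher)
```

The GPU clock alone rises by roughly 60%, which is in the same range as the roughly 70% gain NVIDIA quotes.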

Now, if you’re thinking: “What if I don’t have a Nano? Does that mean I can’t ‘wear the red underwear’ and enter Super Mode?”

Well, surprise! Orin NX also has a Super Mode, and its performance increases by nearly 50%. But (and we all hate that ‘but’), unlike the Nano, the NX didn’t get a price cut; it remains at its original price. As for the highest-end Jetson AGX Orin, there’s still no sign of a Super Mode update. Whether it will get its own “red underwear” remains to be seen.

Unboxing the Jetson Orin Nano

Now, it’s time to unveil the highlight of this article: Advantech, a globally renowned industrial computer manufacturer, has provided me with a Jetson Orin Nano (EPC-R7300IF-ALA1NN) for testing. This Nano unit comes pre-configured in Super Mode. Moreover, to align with the growing trend of AI applications across various industries, it is currently available at a special discounted price. Let’s proceed with the unboxing.

Unboxing and Initial Impressions

Upon opening the package, the first thing that stands out is how different this device is from standard development boards. Advantech’s industrial-grade craftsmanship ensures that the device is encased in a robust protective shell, designed to withstand the harsh conditions of industrial environments.

Design and Hardware Features

Removing the top heatsink reveals a solid, sturdy build. The unit weighs 780 g, which suggests high-quality materials. It is also fanless, which eliminates the risk of fan failure.

Front Panel

- SIM card slot & USB OTG

- The SIM card slot allows the device to be upgraded with 5G/LTE cellular connectivity for internet access, making it a great solution for factories without Wi-Fi.

Rear Panel

- Dual Ethernet ports

- HDMI output

- Two USB 3.0 ports

- Industrial power connector

The internal design also demonstrates Advantech’s attention to detail. It includes built-in expansion slots for Wi-Fi, 5G/LTE, and SSD modules, making it easy to adapt the unit to a wide range of application scenarios.

After booting up, the system status screen shows that the system has been pre-configured in Super Mode from the factory. The image below displays the system status, where the MAXN option is also visible.
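If a script (for example, a benchmark harness) needs to confirm the active power mode before it runs, it can query NVIDIA’s nvpmodel tool with `nvpmodel -q`. This is a minimal sketch; the parsing assumes the output contains a line of the form `NV Power Mode: <name>`, so check the exact format and mode names on your JetPack release.

```python
import subprocess
from typing import Optional


def parse_power_mode(output: str) -> Optional[str]:
    """Return the mode name from `nvpmodel -q` output, or None if no mode line is found.

    Assumption: the output contains a line like 'NV Power Mode: <name>'.
    """
    for line in output.splitlines():
        if line.strip().startswith("NV Power Mode:"):
            return line.split(":", 1)[1].strip()
    return None


def current_power_mode() -> Optional[str]:
    """Query the active power mode on a Jetson (nvpmodel usually needs root)."""
    result = subprocess.run(
        ["sudo", "nvpmodel", "-q"], capture_output=True, text=True, check=True
    )
    return parse_power_mode(result.stdout)


# Usage on the Jetson itself:
# print("Active power mode:", current_power_mode())
```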

Jetson Orin “Super” Performance Boost Testing

Earlier, we discussed how the Jetson Orin series can achieve significant performance improvements through Super Mode (MAXN). Now, let’s put it to the test and see how much the performance actually improves.

For this experiment, we will use one of the most popular LLMs (Large Language Models) – Llama-3.2. The performance comparison will be conducted across three power modes:

- Super Mode (MAXN)

- 25W Mode

- 15W Mode

Since I no longer have access to a non-Super Jetson Orin Nano, I will use the 15W mode as the baseline: 15W was the highest power mode available before the Super update, so it stands in for the traditional configuration.

Why Use Llama-3.2 for Edge Device Testing?

We chose Llama-3.2 because it offers lightweight 1B and 3B versions, optimized specifically for low-power devices and mobile applications. This makes it ideal for inference testing in edge computing environments.

These models support up to 128K context length, allowing them to handle longer instructions and data inputs while maintaining high inference efficiency.

Additionally, the lightweight Llama 3.2 models are designed for on-device use on mobile and other low-power hardware, which lets us evaluate the device’s computational power, memory usage, and power efficiency under a realistic workload.

By testing Jetson Orin with Llama, we can not only assess its AI processing capabilities but also verify its stability and performance limits in resource-constrained environments.

Step 1: Setting Up the Testing Environment

First, we install Ollama, an open-source platform for running LLMs locally without relying on cloud services. It is easy to use and supports many open-source models, such as Llama 2, Mistral, and CodeLlama. We will use Ollama to run Llama 3.2 as a basic chatbot. The installation command is as follows:

```shell
curl -fsSL https://ollama.com/install.sh | sh
```

From the installation process above, we can see that Ollama automatically detects the system environment during installation and downloads the corresponding version. For example, if it detects JetPack 6, it will download the appropriate components.

Once the installation is complete, you can specify the model to run. In this case, we run Llama 3.2 (the default llama3.2 tag pulls the 3B model) with the following command:

```shell
ollama run llama3.2
```

Once execution starts, the system prompts with >>>, allowing us to interact with the AI. For this test, I asked a question about buying an electric vehicle, and the AI provided a comprehensive response.
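The interactive >>> session is convenient for manual testing. For scripted tests, Ollama also exposes a local REST API. The sketch below sends one question to the /api/generate endpoint using only the Python standard library; it assumes the Ollama server is running on its default port (11434) and that llama3.2 has already been pulled.

```python
import json
import urllib.request

# Ollama's local REST endpoint (default port 11434).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming /api/generate request for the given model and prompt."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str) -> dict:
    """Send the prompt to a running Ollama server and return the parsed JSON reply."""
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        return json.loads(resp.read())


# Usage on the device running Ollama:
# print(ask("llama3.2", "How about electric car")["response"])
```

With "stream" set to False, the server returns a single JSON object once the whole answer is ready, which is simpler to handle in a test script than the default streamed chunks.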

Now, let’s move on to performance evaluation—is NVIDIA CEO Jensen Huang’s claim of a 1.7x performance boost real? Let’s find out.

Step 2: Performance Testing

As mentioned earlier, we will test three different power consumption modes to simulate Super Mode and Traditional Mode, and then compare the differences. Although this is an informal test, we ensure fairness by following these guidelines:

- Rebooting is required when switching modes, to prevent caching effects (for example, a model still loaded in memory from the previous run) from skewing the results.

- Each mode will be tested with five questions, covering both simple and complex topics. The average result will be calculated to avoid biases caused by a single question.

List of test questions:

- hello world

- Who are you

- How about electric car

- What is CSS

- How about nuclear power plant
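The averaging step in this methodology is easy to automate. A minimal sketch, assuming a hypothetical run_one(question) helper that you write yourself (for example, around Ollama’s REST API) and that returns a dict of metrics for a single question; switching and rebooting between power modes still happens outside the script.

```python
from statistics import mean
from typing import Callable, Dict, Sequence

# The five test questions used in every power mode.
QUESTIONS = (
    "hello world",
    "Who are you",
    "How about electric car",
    "What is CSS",
    "How about nuclear power plant",
)


def average_metrics(
    run_one: Callable[[str], Dict[str, float]],
    questions: Sequence[str] = QUESTIONS,
) -> Dict[str, float]:
    """Ask each question once and average every metric over all questions.

    `run_one` is a hypothetical helper: it sends one question to the model and
    returns that question's metrics, e.g. {"total_duration_s": ..., "eval_rate": ...}.
    """
    results = [run_one(q) for q in questions]
    return {key: mean(r[key] for r in results) for key in results[0]}
```

Averaging every metric over the same fixed question set is what keeps a single unusually short or long answer from dominating the comparison between modes.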

To measure Ollama’s performance, we append --verbose to the run command:

```shell
ollama run llama3.2 --verbose
```

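With --verbose, Ollama prints timing statistics after each response. We focus on four of them:

- Total Duration (“total duration” in Ollama’s output): overall processing and response time

- Load Duration (“load duration”): model loading time

- Prompt Evaluation Rate (“prompt eval rate”): tokens processed per second while reading the input

- Inference Speed (“eval rate”): tokens generated per second while producing the response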
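The same numbers that --verbose prints are also returned in the JSON reply from Ollama’s /api/generate endpoint, with all durations in nanoseconds. A minimal sketch that converts one non-streaming reply into the four metrics used here, assuming the reply contains the total_duration, load_duration, prompt_eval_count, prompt_eval_duration, eval_count, and eval_duration fields:

```python
NS_PER_S = 1e9  # Ollama reports durations in nanoseconds


def ollama_metrics(reply: dict) -> dict:
    """Convert the timing fields of one Ollama reply into the four metrics.

    Durations become seconds; rates are tokens per second.
    """
    return {
        "total_duration_s": reply["total_duration"] / NS_PER_S,
        "load_duration_s": reply["load_duration"] / NS_PER_S,
        "prompt_eval_rate": reply["prompt_eval_count"]
        / (reply["prompt_eval_duration"] / NS_PER_S),
        "eval_rate": reply["eval_count"] / (reply["eval_duration"] / NS_PER_S),
    }
```

Each rate is simply a token count divided by the time spent on it, which is why a handful of prompt tokens (as in short test questions) can make the prompt evaluation rate noisy.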

Performance Test Results

Below is the test result summary (average of five questions):

Metric 1: Total Duration

At 15W, the average total duration for the five questions was 56 seconds; at 25W, it dropped to 42 seconds. In Super Mode (MAXN), the highest-performance mode, it fell below 20 seconds.

Compared with the 15W baseline, the total duration was:

- 25W: 25.41% shorter

- MAXN: 65.41% shorter

This supports the claimed 1.7x performance boost. In fact, a 65.41% shorter total duration corresponds to roughly a 2.9x speed-up, so MAXN exceeded the claim in this informal test.
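Percentages for total duration describe how much shorter the run became, not how much faster the system is. Converting one into the other takes one more step: if the duration drops by r%, the speed-up factor is 1 / (1 - r/100). A small sketch using the averages reported above:

```python
def percent_shorter(baseline_s: float, new_s: float) -> float:
    """Percentage by which new_s is shorter than baseline_s."""
    return (baseline_s - new_s) / baseline_s * 100


def speedup_from_reduction(percent: float) -> float:
    """Speed-up factor implied by a duration that is `percent` % shorter."""
    return 1 / (1 - percent / 100)


# Rounded averages from the test: 56 s at 15W, 42 s at 25W.
print(f"25W vs 15W: {percent_shorter(56, 42):.1f}% shorter")   # 25.0% shorter
print(f"25W speed-up: {speedup_from_reduction(25.41):.2f}x")   # 1.34x
print(f"MAXN speed-up: {speedup_from_reduction(65.41):.2f}x")  # 2.89x
```

The 25.0% from the rounded 56 s and 42 s averages differs slightly from the 25.41% computed on the unrounded data.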

Metric 2: Load Time

Load times differ only slightly across modes, although MAXN and 25W are still somewhat faster than the 15W traditional mode.

Metric 3: Prompt Evaluation Speed

This metric shows an unusual pattern: 25W outperforms 15W, but MAXN lags significantly behind. This could be due to the small sample size or other underlying issues. Moreover, our test prompts are short and consume few tokens, so this metric matters less here than Metric 4.

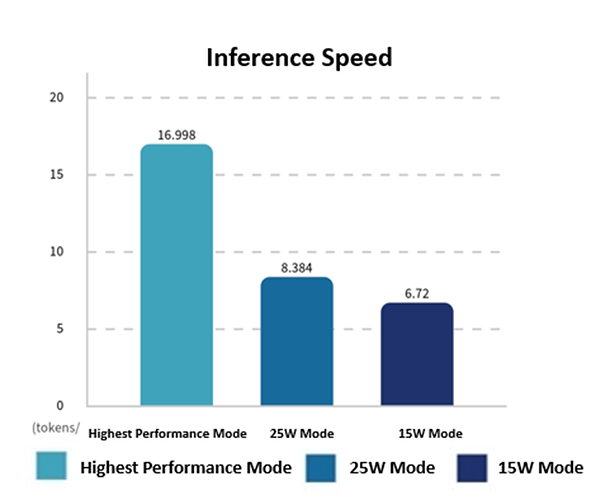

Metric 4: Inference Speed

Inference speed is arguably the most important evaluation metric for an LLM system, as this is where the heaviest computational workload occurs.

- MAXN mode demonstrates a significant lead in this category.

- 25W mode offers only a slight improvement over 15W mode.

- The performance improvements are calculated as follows:

- 25W: 24.7% increase

- MAXN: 152.98% increase

This exceeds the claimed 1.7x boost: a 152.98% increase means that, in this informal test, MAXN generated tokens about 2.5 times as fast as the 15W mode.
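Because inference speed is a rate (tokens per second), a percentage increase converts directly into a speed-up factor: x% higher means 1 + x/100 times as fast. A small sketch using the inference-speed figures above:

```python
def percent_higher(baseline_rate: float, new_rate: float) -> float:
    """Percentage by which new_rate exceeds baseline_rate."""
    return (new_rate - baseline_rate) / baseline_rate * 100


def speedup_from_increase(percent: float) -> float:
    """Speed-up factor implied by a throughput that is `percent` % higher."""
    return 1 + percent / 100


print(f"25W: {speedup_from_increase(24.7):.2f}x")     # 1.25x
print(f"MAXN: {speedup_from_increase(152.98):.2f}x")  # 2.53x
```

Unlike the duration-based metric, no reciprocal is involved here, because a higher rate already means faster generation.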

Performance Test Summary

The Super Mode (MAXN) of Jetson Orin has indeed demonstrated a significant performance boost. This study conducted real-world tests using the Llama 3.2 language model, comparing inference performance across three power modes: 15W, 25W, and MAXN.

The test environment utilized Ollama as the LLM execution platform, with Llama 3.2 chosen for its lightweight design, making it well-suited for edge computing applications. The test methodology involved five fixed questions, and performance was measured using four key metrics:

- Total Duration

- Load Time

- Prompt Evaluation Speed

- Inference Speed

Key Findings

- In MAXN mode, the total duration was 65.41% shorter than in 15W mode.

- Inference speed increased by 152.98% (about 2.5x), which meets and even exceeds NVIDIA CEO Jensen Huang’s claim of a 1.7x performance boost.

- Load time showed minimal variation across modes.

- Prompt evaluation speed in MAXN mode unexpectedly decreased, possibly due to sample size limitations or other influencing factors.

Conclusion

Despite the minimal differences in load time and the unexpected drop in prompt evaluation speed, Super Mode (MAXN) significantly enhances LLM inference performance, making it an ideal choice for high-efficiency AI inference in edge computing applications.

VLM Testing: AI-Powered Vision Language Models

In the final part of this article, we will explore the application of Vision Language Models (VLMs).

VLMs are AI models that integrate image recognition and language understanding, aiming to interpret images like humans—not only recognizing what’s in an image but also understanding its context and converting it into textual descriptions. Unlike traditional object detection models (such as YOLO and Faster R-CNN), which focus on identifying and classifying specific objects within an image, VLMs perform holistic image understanding.

In other words, where conventional object detection models classify individual objects based on visual features, VLMs analyze the image as a whole and attempt to comprehend the scenes, structures, and relationships within it. By combining language and vision models, VLMs extract higher-level abstract information and generate corresponding descriptions or inferences.

How Humans Process Images

When people look at a picture, they first perceive the overall scene before analyzing the relationships between objects within the image. For instance:

- Scene Recognition – Understanding the general environment of the image, such as a street, park, or restaurant.

- Structural Analysis – Identifying relationships between different elements in the image, such as two people conversing or a dog running on the grass.

- Contextual Reasoning – Not only recognizing objects but also inferring potential actions or contextual meaning from the scene.

Applications of VLMs

VLMs have a wide range of applications, including but not limited to:

- Image Captioning – Generating natural language descriptions based on an image.

- Visual Question Answering (VQA) – Answering specific questions based on a given image.

- Image-Text Matching – Matching or retrieving images based on text queries.

These models are trained on massive datasets that pair visual and linguistic information, which allows them to approach human-like visual comprehension in tasks like these.

VLMs Overcoming Traditional Object Detection Limitations

VLMs provide new possibilities beyond traditional object detection techniques.

For example, in 2024, I worked with a client on a project aimed at detecting “suspicious shoplifting behavior” in retail stores. With traditional object detection, this is extremely difficult, because a detector can only track a person’s presence in a specific area or identify objects they are holding. Defining “suspicious behavior”, however, requires a clear set of behavioral criteria.

The client’s team proposed multiple possible rules for identifying suspicious actions, but after extensive discussion, we ultimately abandoned the project. The reason? Object detection alone was insufficient for assessing behavioral intent.

However, VLM technology offers a potential solution to this challenge. Below, I will introduce some basic VLM applications that demonstrate its capabilities.

VLM Implementation with VILA 1.5

For this experiment, I used NanoVLM’s VILA 1.5 model. The installation process follows the guidelines provided on the Jetson AI Lab website.

VILA 1.5 (Vision-and-Language Pretrained Model) is an advanced vision-language pretraining model. It has been trained on a large-scale dataset of image-text pairs, enabling it to better understand and generate descriptive language for visual content.

Key Innovations of VILA 1.5

- Enhanced Cross-Modal Learning – Strengthens the correlation between images and text for improved accuracy.

- Detailed Contextual Understanding – Allows the model to comprehend fine-grained image details and generate logical language responses based on those details.

To integrate this with real-world applications, I modified the code to allow it to process real-time webcam feeds using OpenCV. The analysis results are directly displayed on the live video stream.
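The modified code itself is not included in this article. As a rough illustration only, a webcam loop of this kind could look like the sketch below. The describe callable is a hypothetical stand-in for the actual VILA 1.5 inference call; only the OpenCV capture, text-overlay, and display calls are real library APIs. Because VLM inference is much slower than frame capture, the sketch queries the model only every n-th frame and keeps showing the latest answer in between.

```python
import textwrap
from typing import Any, Callable, List


def wrap_caption(text: str, width: int = 40) -> List[str]:
    """Split a model answer into short lines so it fits on the video frame."""
    return textwrap.wrap(text, width=width) or [""]


def should_query(frame_index: int, every_n: int) -> bool:
    """Run the VLM only on every n-th frame, since inference is far slower than capture."""
    return frame_index % every_n == 0


def run_webcam(describe: Callable[[Any, str], str], prompt: str, every_n: int = 30) -> None:
    """Show the webcam feed with the latest VLM answer drawn on top; press 'q' to quit.

    `describe(frame, prompt)` is a hypothetical placeholder for the VILA 1.5 call.
    """
    import cv2  # imported lazily so the helpers above work without OpenCV

    cap = cv2.VideoCapture(0)  # first attached camera
    caption, frame_index = "", 0
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            if should_query(frame_index, every_n):
                caption = describe(frame, prompt)  # blocks until the model answers
            for i, line in enumerate(wrap_caption(caption)):
                cv2.putText(frame, line, (10, 30 + 30 * i), cv2.FONT_HERSHEY_SIMPLEX,
                            0.8, (0, 255, 0), 2, cv2.LINE_AA)
            cv2.imshow("VLM", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
            frame_index += 1
    finally:
        cap.release()
        cv2.destroyAllWindows()
```

Because describe() runs inline, the video pauses while the model is answering; moving the call to a background thread is a common refinement.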

Since my previous projects have focused on image recognition, my first VLM test was designed to analyze traffic congestion, a task conventionally handled with vehicle detection.

Case Studies

1. Traffic Congestion Analysis

Objective: Detect traffic congestion using VLM-based scene understanding.

Prompt: “Describe the traffic condition using a single adjective (e.g., ‘busy’, ‘fine’, ‘empty’).”

Results:

- Sparse traffic → “empty”

- Heavy congestion → “busy”

Test Results:

The recognition performance was very good. We used a webcam to capture real-time road images displayed on a computer screen. The model responded accurately based on the given prompt.

Figure A shows a real-time image of a road. Since the traffic volume in the image is very low, the system classified it as an “empty” state.

Figure B shows a real-time image of a highway. Since the traffic in the image appears congested, the system classified it as a “busy” state.

The reason for having the model respond with keywords is that it allows traffic condition “labels” to be directly stored in the database, eliminating the need for manual interpretation of textual descriptions.
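A minimal sketch of that idea, assuming SQLite as the database and a hypothetical traffic table: the model’s one-word answer is normalized against the labels suggested in the prompt before it is stored, so an unexpected reply (for example, a full sentence) is skipped rather than written to the table.

```python
import sqlite3
from datetime import datetime, timezone
from typing import Optional

# Labels suggested in the traffic prompt; anything else is treated as unusable.
ALLOWED_LABELS = {"busy", "fine", "empty"}


def init_db(conn: sqlite3.Connection) -> None:
    """Create the (hypothetical) traffic table if it does not exist yet."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS traffic (camera_id TEXT, label TEXT, ts TEXT)"
    )


def normalize_label(answer: str) -> Optional[str]:
    """Turn a raw answer such as ' Busy. ' into 'busy'; return None if it is not a known label."""
    word = answer.strip().strip(".!\"'").lower()
    return word if word in ALLOWED_LABELS else None


def store_label(conn: sqlite3.Connection, camera_id: str, answer: str) -> Optional[str]:
    """Store a normalized label with a UTC timestamp; unknown answers are skipped."""
    label = normalize_label(answer)
    if label is not None:
        conn.execute(
            "INSERT INTO traffic (camera_id, label, ts) VALUES (?, ?, ?)",
            (camera_id, label, datetime.now(timezone.utc).isoformat()),
        )
        conn.commit()
    return label
```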

Conclusion:

This test provided valuable insights compared to traditional object detection-based methods. In conventional object detection, traffic conditions are assessed by counting the number of vehicles and applying predefined rules (e.g., more than 10 vehicles = congested, fewer than 5 = smooth traffic).

However, with VLM technology, there is no need to pre-count vehicles. Instead, the model analyzes the entire scene and directly determines the traffic state, introducing an entirely new approach to traffic assessment.

The key difference is that the traditional, count-based approach still requires manual rule-setting. In addition, differences in camera angle and location mean the rules must be adjusted manually for each environment.

In contrast, VLM automates the entire process, allowing AI to interpret and process the scene without human intervention, significantly reducing the need for manual rule configurations.
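For comparison, the count-based rule from the traditional pipeline (more than 10 vehicles = congested, fewer than 5 = smooth traffic) can be sketched as follows. The sketch assumes the vehicle count comes from a separate object detector; the camera IDs and the second threshold pair are hypothetical, and the “moderate” label is added here only to cover the 5 to 10 range that the example rule leaves undefined.

```python
from typing import Dict, Tuple

# Hypothetical per-camera thresholds: (smooth_below, congested_above).
# Every new camera angle or location needs its own hand-tuned pair.
THRESHOLDS: Dict[str, Tuple[int, int]] = {
    "default": (5, 10),       # the example rule from the text
    "highway-cam": (15, 30),  # hypothetical wider view with more vehicles in frame
}


def classify_by_count(vehicle_count: int, camera_id: str = "default") -> str:
    """Classic count-based rule: above `high` = congested, below `low` = smooth."""
    low, high = THRESHOLDS.get(camera_id, THRESHOLDS["default"])
    if vehicle_count > high:
        return "congested"
    if vehicle_count < low:
        return "smooth"
    return "moderate"  # counts between the thresholds need yet another rule
```

The same 20 vehicles produce different labels depending on which camera’s thresholds apply, which is exactly the per-site tuning the VLM approach avoids.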

2. Workplace Monitoring

Objective: Detect employee actions using VLM-based behavior recognition.

Prompt: “Identify the worker’s action in the image.”

Results:

- Typing → “The worker is typing on a keyboard.”

- Using a phone → “The worker is holding a cellphone.”

- Sleeping → “The worker is sleeping.”

Test Results:

The recognition performance was very good. We used a webcam to capture various human actions, and the model accurately responded based on the given prompts.

Figure A shows a webcam-captured human action. When a person uses a cellphone in front of the camera, the system correctly identifies the action and immediately responds:

“The worker is holding a cell phone.”

Figure B shows that when a person is typing on a keyboard in front of the camera, the system correctly identifies the action and immediately responds:

“The worker is typing on a keyboard.”

Figure C shows that when a person is reading a book in front of the camera, the system correctly identifies the action and immediately responds:

“The worker in the image is reading.”

Figure D shows that when a person is sleeping with their head down in front of the camera, the system correctly identifies the action and immediately responds:

“The worker in the image is sleeping.”

Conclusion:

This test provided a new perspective on traditional human recognition methods. In the past, human activity recognition typically required detecting body keypoints (joints) and then analyzing the resulting movements.

However, with VLM, the system directly interprets the image to determine whether an employee is actively working, without the need for additional body feature labeling.

This approach greatly simplifies the process and showcases VLM’s powerful capabilities in visual semantic understanding, making us excited about its future applications.
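Because the VLM answers in free text (the same action came back as both “cellphone” and “cell phone” in this test), a small normalization step helps if the results are to be logged or counted. A minimal sketch, assuming a hypothetical phrase table built from the responses seen here; a production system would need a broader vocabulary or a prompt that constrains the answer to fixed labels.

```python
from typing import Optional, Tuple

# Hypothetical phrase -> label table, built from the responses seen in this test.
# Order matters: the first phrase found in the description wins.
ACTIVITY_PHRASES: Tuple[Tuple[str, str], ...] = (
    ("typing", "typing"),
    ("cell phone", "phone"),
    ("cellphone", "phone"),
    ("reading", "reading"),
    ("sleeping", "sleeping"),
)


def activity_label(description: str) -> Optional[str]:
    """Map a free-text VLM answer to a fixed activity label, or None if nothing matches."""
    text = description.lower()
    for phrase, label in ACTIVITY_PHRASES:
        if phrase in text:
            return label
    return None
```

Returning None for unmatched answers keeps unexpected descriptions out of the statistics instead of forcing them into the wrong category.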

3. Construction Site Safety

Objective:

Utilize VLM to analyze construction site images and provide relevant safety insights or identify potential hazards.

Prompt:

Analyze the worksite environment and return a status description, such as:

- “The construction area is not well.”

- “The construction area is not clear.”

Since this case involves complex scene analysis, it is not possible to display all the detected elements within the image. Therefore, we have included screenshots of the VLM-generated descriptions for reference.

Image A:

The model returned:

“The construction area is not well.”

Analysis Results:

- The construction site lacks proper lighting, which may create unseen hazards.

- The area appears to be a construction site with metal scaffolding.

- There are two workers present in the area.

- Both workers are wearing safety helmets.

Image B:

The model returned:

“The construction area is not clear.”

Analysis Results:

- The construction site is poorly maintained, with visible debris present.

- The worker is not wearing any protective gear, such as a shirt.

- The worker is using a metal pole as a temporary ladder.

Conclusion

This test confirms Jetson Orin Nano Super’s potential in VLM-based AI applications. From human behavior recognition to traffic analysis and worksite safety monitoring, VLM technology enhances image scene understanding beyond traditional object detection methods.

Jetson Orin Nano Super delivers stable performance for real-time AI inference on edge devices, making it a promising platform for smart monitoring, behavioral analysis, and safety management applications.

Conclusion

This test validated the potential of VLMs (Vision Language Models) on the Jetson Orin Nano Super and demonstrated their strong capabilities in visual semantic understanding.

Whether in human recognition, traffic flow analysis, or construction site safety monitoring, VLMs can make direct scene-based assessments without relying on traditional data labeling or feature extraction methods.

As a low-power AI edge computing device, the Jetson Orin Nano Super performed stably across these applications, efficiently handling real-time image analysis while enhancing system flexibility and efficiency.

These findings suggest that VLM applications on the Jetson Orin Nano Super could be widely adopted in the future for smart surveillance, behavioral analysis, and safety management, making this a promising area for further optimization and development.

Author: Yu Junzhe

He is an assistant professor, columnist, renowned blogger, and Director of Technology R&D at Diandian Technology. His expertise includes artificial intelligence, multimedia interaction (Unity), smart interactive devices (mobile apps, Arduino), virtual and augmented reality interactions, and IoT development.

Education:

Ph.D. in Information Management from National Sun Yat-sen University.